How stereo VR works with the human brain

Our expert dev, Sandi, has been looking at how VR180 stereo footage works with the brain, focusing on how stereo offset relates to perceived scale. His findings help explain why objects that are shot closer to the lens seem larger in VR when using the Canon dual fisheye camera. Read on for the full explanation.

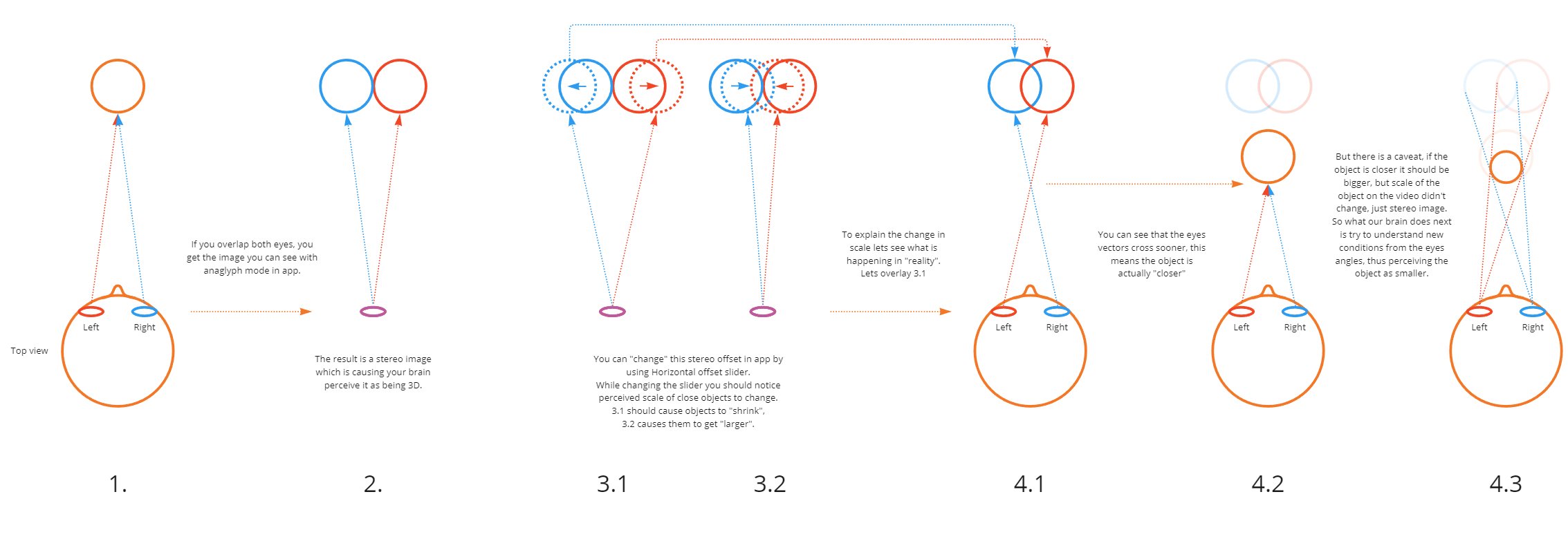

First, take a look at the infographic, which shows how the eyes view an image in stereo and how virtual reality uses this to create the sense of a three-dimensional image. (Or you can see the infographic on Miro here.)

If you want to test this effect, open any passthrough video (or video with a flat background) and try moving the "Horizontal offset" bar to a negative value. You should see the subject becoming "smaller".
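To put rough numbers on this effect, here is a minimal Python sketch of the geometry. It is an illustration under stated assumptions, not how any particular player implements its offset control. It assumes simple two-eye geometry, a viewer eye separation of 63 mm (an assumed typical value, not a figure from our tests), and that the offset can be expressed as an extra convergence angle in degrees. The function names are made up for this example, and which slider direction corresponds to "more convergence" is also an assumption.

```python
import math

# Assumed typical viewer eye separation in metres (illustrative, not measured).
EYE_SEPARATION_M = 0.063


def vergence_deg(distance_m, eye_separation_m=EYE_SEPARATION_M):
    """Angle between the two lines of sight when both eyes fixate a point."""
    return math.degrees(2 * math.atan(eye_separation_m / (2 * distance_m)))


def distance_for_vergence(angle_deg, eye_separation_m=EYE_SEPARATION_M):
    """Inverse of vergence_deg: the distance implied by a convergence angle."""
    return eye_separation_m / (2 * math.tan(math.radians(angle_deg) / 2))


def perceived_scale(true_distance_m, extra_vergence_deg,
                    eye_separation_m=EYE_SEPARATION_M):
    """Ratio of perceived size to original size.

    The subject covers the same angle in the image, but the offset makes the
    eyes converge by extra_vergence_deg more (or less, if negative). With the
    angular size fixed, perceived size scales with perceived distance.
    """
    original = vergence_deg(true_distance_m, eye_separation_m)
    new_distance = distance_for_vergence(original + extra_vergence_deg,
                                         eye_separation_m)
    return new_distance / true_distance_m


if __name__ == "__main__":
    # A subject filmed at 1 m, with a few hypothetical offsets in degrees.
    for offset in (-1.0, 0.0, 1.0):
        print(f"offset {offset:+.1f} deg -> scale {perceived_scale(1.0, offset):.2f}")
```

With these assumptions, making the eyes converge more pulls the subject closer and shrinks it, while converging less pushes it away and enlarges it. That matches what you see when the subject becomes "smaller" as you move the bar.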

A related effect is zooming. When a subject is close to the camera, try zooming out. You’ll notice that closer objects move into the distance more slowly than the background. The subject appears to stay closer because of its larger stereo separation.

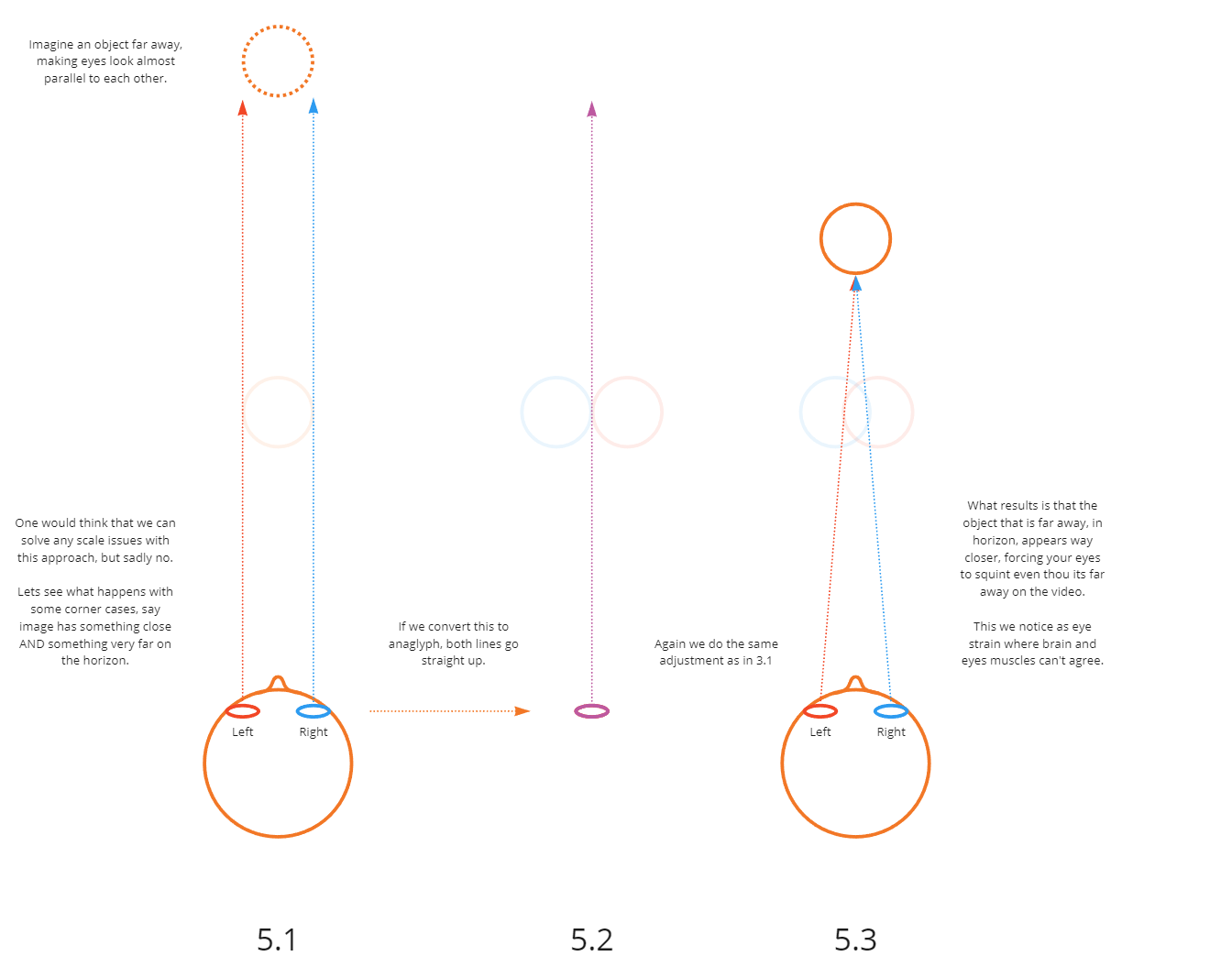

You can also view the second part of the infographic (or go here to see it on Miro). It attempts to explain the limits of the Canon dual fisheye camera and its 60 mm lens separation, and shows why we can't force any major scaling changes by manipulating the stereo image in post-production.
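One way to see the limit in numbers is the rough Python sketch below. It is a simplified reading of the geometry, not a reproduction of the infographic: it treats the two lenses as ideal points 60 mm apart, ignores the fisheye projection entirely, and models a post-production shift as one fixed angle added to every object. All function names are hypothetical.

```python
import math

LENS_SEPARATION_M = 0.060  # lens separation quoted in this article


def parallax_deg(distance_m, baseline_m=LENS_SEPARATION_M):
    """Angle between the two lens views of a point straight ahead."""
    return math.degrees(2 * math.atan(baseline_m / (2 * distance_m)))


def implied_distance_m(parallax, baseline_m=LENS_SEPARATION_M):
    """Distance that a given parallax angle corresponds to."""
    if parallax <= 0:
        return math.inf  # zero or negative parallax: at or beyond infinity
    return baseline_m / (2 * math.tan(math.radians(parallax) / 2))


def distance_after_offset(distance_m, offset_deg,
                          baseline_m=LENS_SEPARATION_M):
    """Implied distance once the same angular offset is added everywhere."""
    shifted = parallax_deg(distance_m, baseline_m) + offset_deg
    return implied_distance_m(shifted, baseline_m)


if __name__ == "__main__":
    offset = 0.5  # hypothetical uniform shift, in degrees
    for d in (0.5, 1.0, 2.0, 5.0):
        new_d = distance_after_offset(d, offset)
        print(f"{d:4.1f} m: parallax {parallax_deg(d):.2f} deg, "
              f"after offset {new_d:.2f} m (x{new_d / d:.2f})")
```

Under these assumptions the same shift changes a nearby subject's implied distance only slightly but changes a distant one's a lot, so a single offset cannot rescale the whole scene evenly.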

This is a starting point for discussion and we’d love to hear your thoughts. You can join the discussion over on our busy, friendly forum here.